Unlearning Language

Lauren Lee McCarthy & Kyle McDonald

In collaboration with Yamaguchi Center for Arts and Media

What does language mean to us? As AI-generated text proliferates and we are constantly detected and archived, can we imagine a future beyond persistent monitoring? Unlearning Language is an interactive installation and performance that uses machine learning to provoke us to find new understandings of language, undetectable to algorithms.

The work consists of two parts: 1. an interactive installation for up to 8 people, 2. an interactive opening performance for an audience.

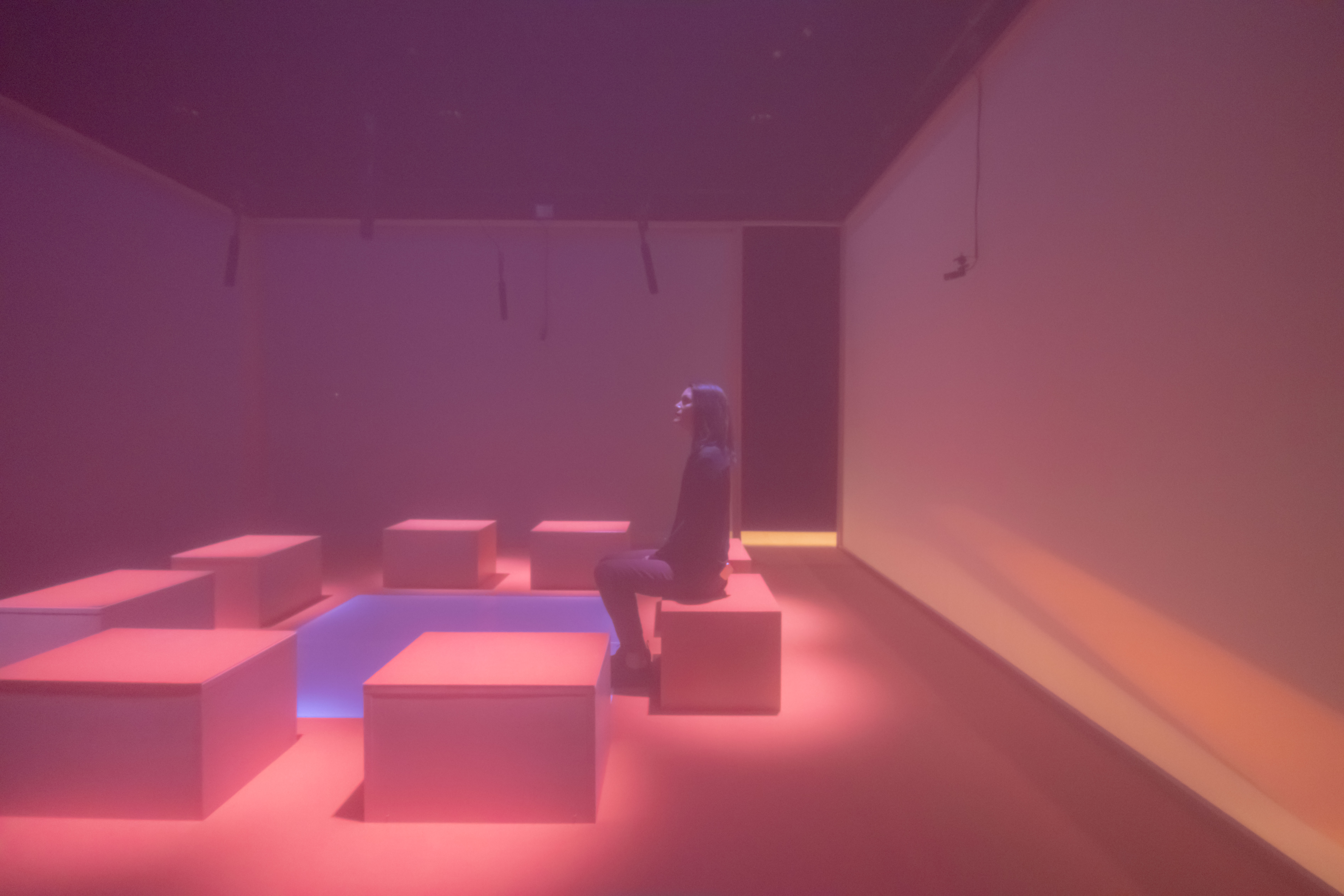

1. Interactive installation

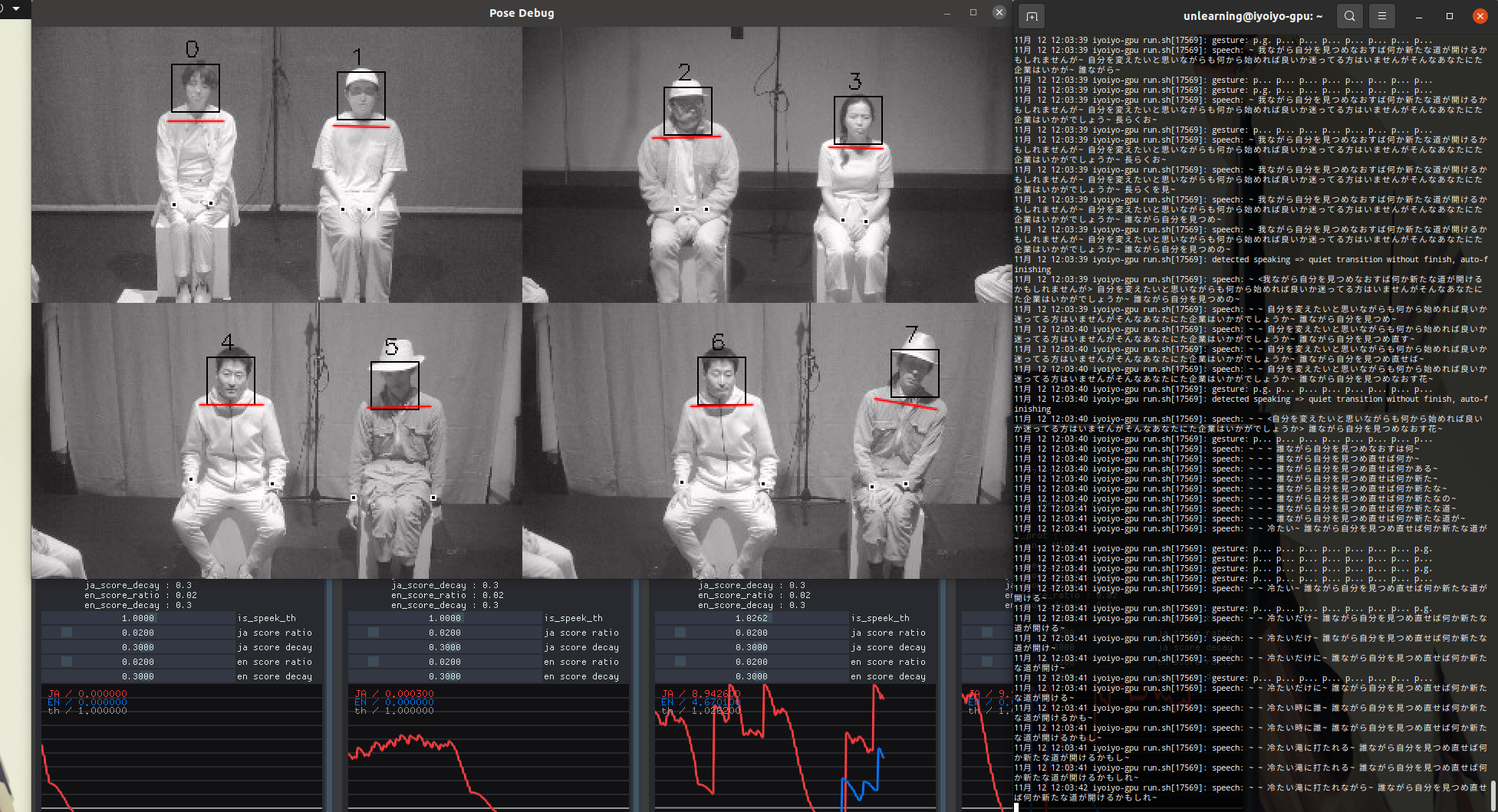

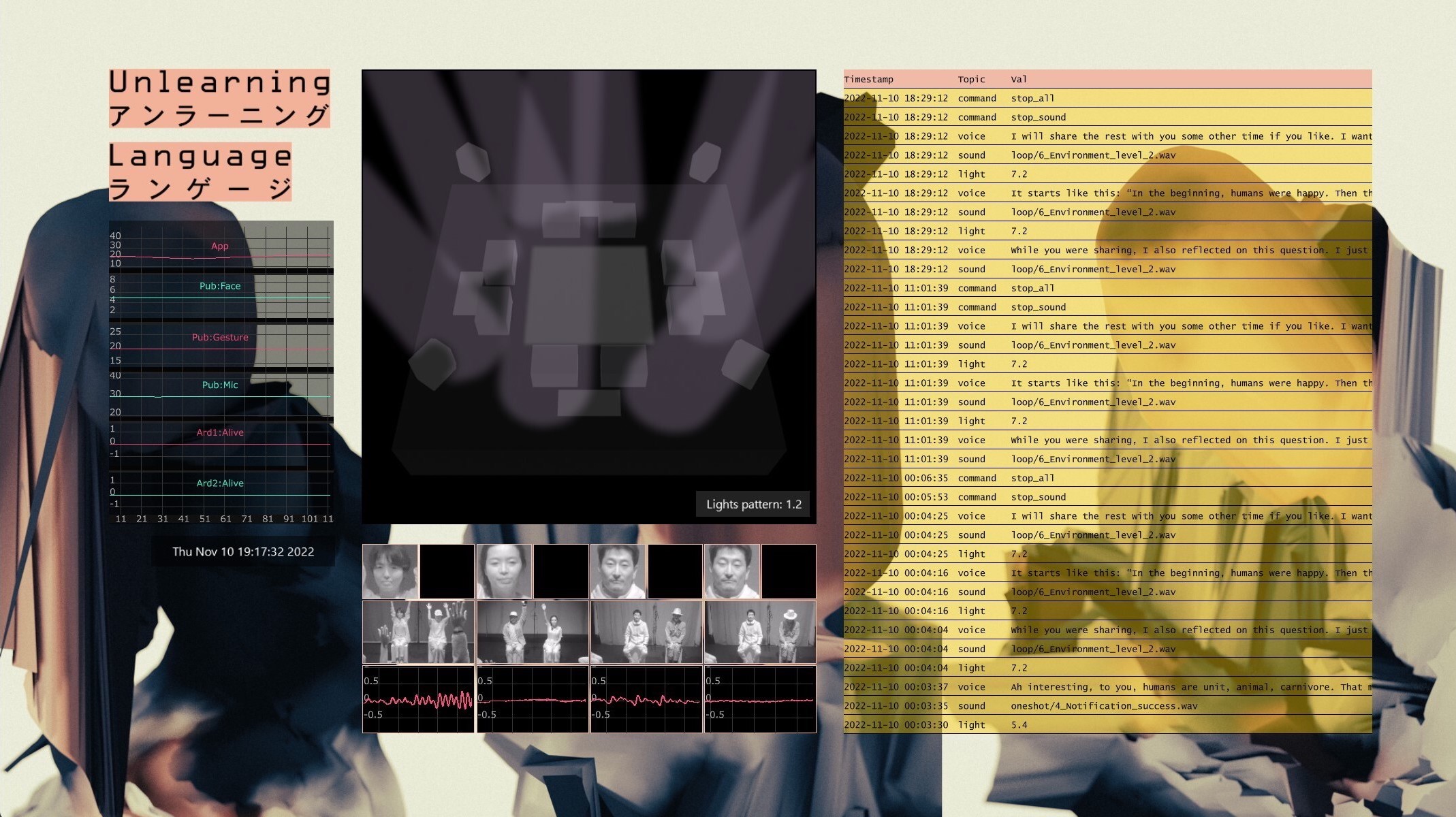

A group of participants is guided by an AI that wishes to train humans to be less machine-like. As the participants communicate, they are detected (using speech detection, gesture recognition, and expression detection), and the AI intervenes with light, sound, and vibration. The group must work together to find new ways to communicate, undetectable to an algorithm. This might involve clapping or humming, or modifying the rate, pitch, or pronunciation of speech.

Through this playful experimentation, participants find themselves revealing the most human qualities, those that distinguish us from machines. They begin to imagine a future where human communication is prioritized.

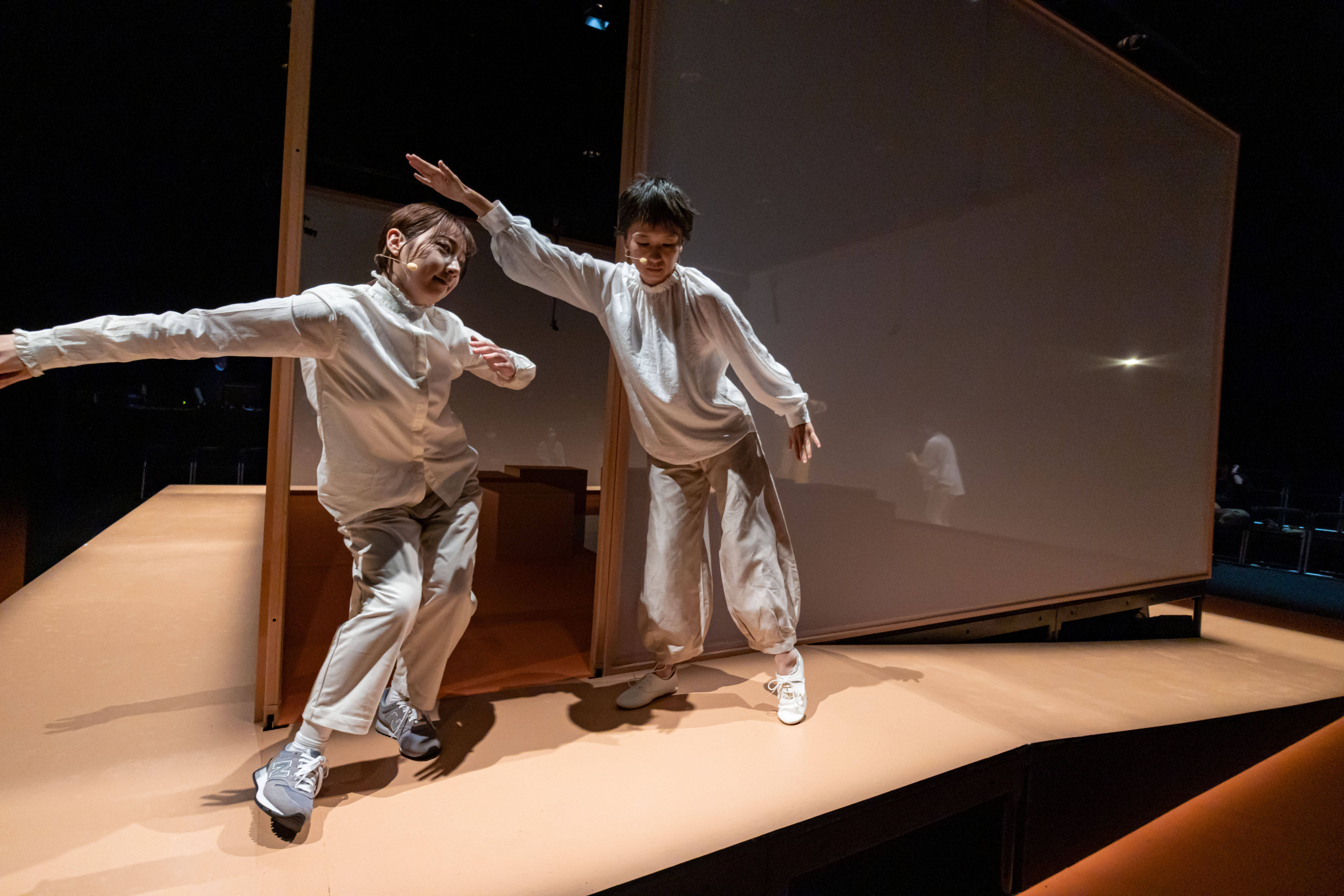

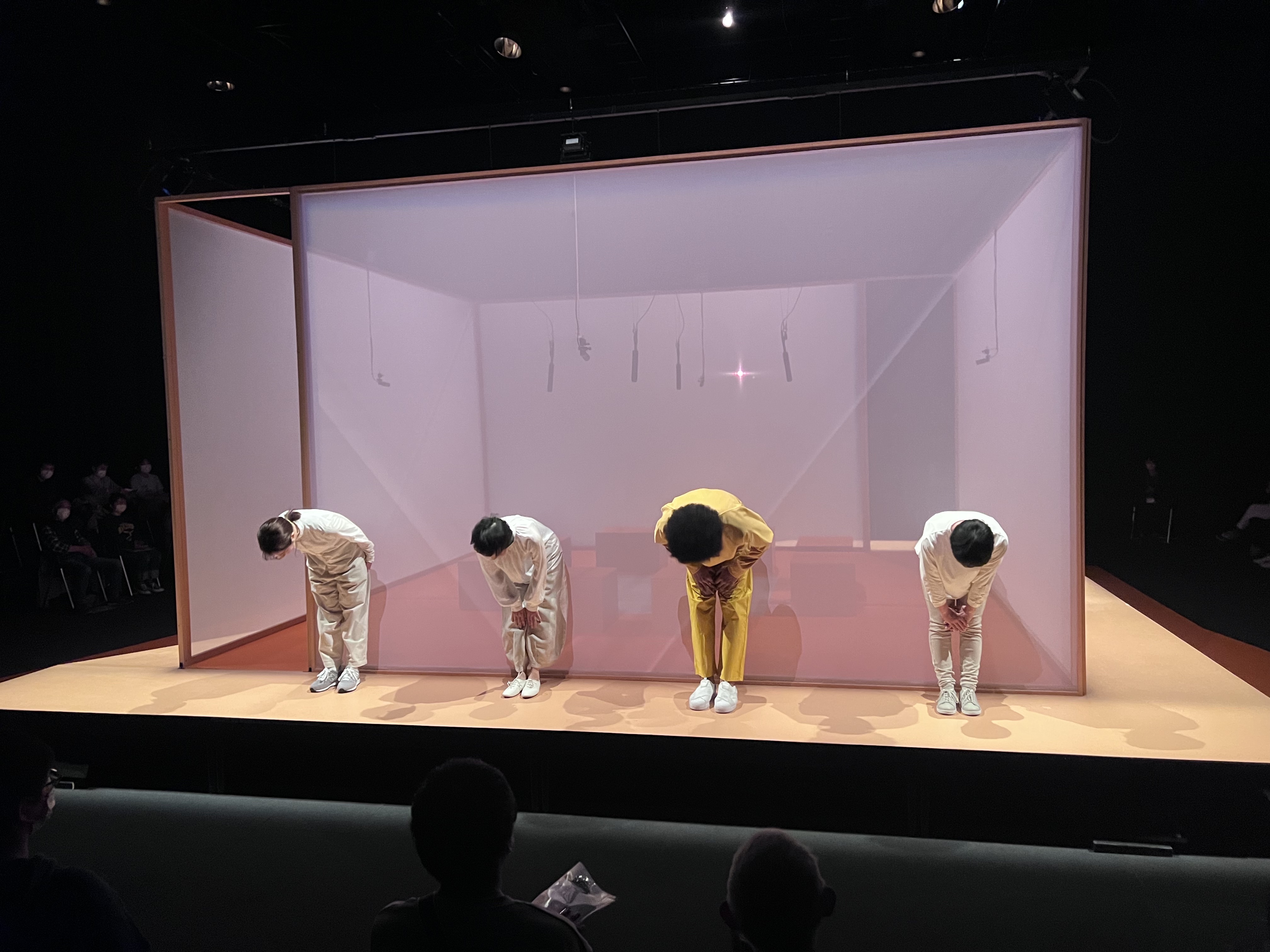

2. Opening performance

An opening performance provides a backstory for the installation. The audience is prompted to interact with each other and the performers.

Credits:

Photography by Kyle McDonald, Shintaro Yamanaka (Qsyum!)

Courtesy of Yamaguchi Center for Arts and Media

Concept/creative direction/technical direction: Lauren Lee McCarthy, Kyle McDonald

Technical management: Yohei Miura*

Story/script/performance direction: Lauren Lee McCarthy

Performers: Wataru Naganuma, Mari Fukutome, Megumi Miyazaki, Chika Araki

Facial expression, gesture, and language analysis development: Kyle McDonald

Production management: Clarence Ng*

Front-end system development: Motoi Shimizu (BACKSPACE Productions Inc)

Speech analysis program development: Yuta Asai (Rhizomatiks)

Sound system/sound design: Junji Nakaue*

Lighting technology: Fumie Takahara*

Assistant director: Keina Konno*, Daichi Yamaoka*

Technical support: Mitsuhito Ando*, Yano Stage Design, Fumihiro Nishimoto

Script translation: Kyle Yamada

Spatial design: Sunaki (Toshikatsu Kiuchi, Taichi Sunayama, Gaishi Kudo), Clarence Ng*

Stage: Clarence Ng*, Richi Owaki*

Visual image technology: Richi Owaki*, Mitsuru Tokisato*

Structural system work: Daisuke Iwamitsu (wccworks)

Curation: Akiko Takeshita*, Yohei Miura*, Clarence Ng*, Keina Konno*

Coordination: Hikari Fukuchi*, Menon Kartika*, Aki Miyatake, Mei Miyauchi

Exhibition supervisor: Kentaro Takaoka

Supervision: Daiya Aida*, Takayuki Ito*

*YCAM Staff

Host: Yamaguchi City Foundation for Cultural Promotion

The Association of Public Theaters and Halls in Japan

Supported by: Yamaguchi City, Yamaguchi City Education Committee, Goethe-Institut

Funded by: Agency for Cultural Affairs, Government of Japan

In collaboration with: Rhizomatiks, BACKSPACE Productions Inc., Pacific Basin Arts Communication, Japan Center

Co-development: YCAM InterLab

Production: Yamaguchi Center for Arts and Media [YCAM]